MX500 Design and Features

The Crucial 8th Gen SSD includes the following major features:

- The world’s smallest 256-gigabit (32-gigabyte) die (59 mm²), utilizing floating gate NAND and designed with CMOS Under the Array (CUA)

- Dynamic Write Acceleration for faster saves and file transfers: an adaptive pool of NAND runs in SLC mode to ensure fast writes

- Hardware-based encryption (SED) to keep personal files and confidential data secure: the operating system can use the drive's own encryption process rather than spend CPU cycles and memory doing encryption on the host system

- Integrated Power Loss Immunity to avoid unintended data loss when the power unexpectedly goes out.

- Exclusive Data Defense to prevent files from becoming corrupted and unusable.

- Redundant Array of Independent NAND (RAIN) to protect data at the component level. RAIN provides parity protection of data.

- Online migration/installation tools as well as Acronis True Image 2017 cloning software.
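The parity protection mentioned for RAIN in the list above can be illustrated with a small XOR sketch. This is a generic RAID-5-style illustration and not Crucial's actual implementation; the function names and the four-chunk stripe are assumptions made up for the example.

```python
from functools import reduce

def xor_parity(chunks):
    """Return the byte-wise XOR of equally sized chunks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))

def rebuild_missing(surviving_chunks, parity):
    """Recover the single missing chunk from the survivors plus the parity chunk."""
    return xor_parity(list(surviving_chunks) + [parity])

# Hypothetical stripe: four data chunks, as if spread across four NAND dies.
stripe = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
parity = xor_parity(stripe)

# Simulate losing the third chunk and rebuilding it from the rest.
survivors = stripe[:2] + stripe[3:]
recovered = rebuild_missing(survivors, parity)
print(recovered)
```

Because XOR is its own inverse, any one lost chunk can be rebuilt from the remaining chunks and the parity; losing two chunks of the same stripe is not recoverable with a single parity chunk.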

Power Loss Immunity Feature

Better SSDs, both old and new, include power backup circuitry to protect the drive from sudden power losses, allowing the drive to complete its saves and any background I/O successfully, which improves data integrity.

This usually takes the form of a large capacitor or a bank of capacitors, providing a 'buffer' for power. Battery and super-capacitor backup is a standard feature in enterprise servers, protecting high-end RAID or storage controllers, SSDs and flash arrays. For an SSD, the function only needs to provide power for milliseconds, allowing the drive to finish what it's doing and shut down. For enterprise gear the power backup is supposed to keep power for longer periods, sometimes days or weeks depending on the product, especially if it has some sort of memory onboard.

Typically for SSDs this feature was only marketed for enterprise products, where sudden power outages, sudden power restores and power surges occur and the device needs to handle them to protect not only itself but also data integrity. It is not such an issue for consumer/client drives, but the Marvell-based MX300 did have a very large capacitor bank on it.

Through the marketing for the MX500, Crucial indicated the new 2nd Gen 3D NAND is better at this too. They have been vague on technical details about how the function works, other than saying they need fewer capacitors, and our photo of the MX500 board shows an almost complete lack of power storage caps. Crucial is calling this Power Loss Immunity, not Power Loss Protection.

Crucial claims Power Loss Immunity works in two ways. First, some of the power protection circuitry has moved into the NAND itself. Given the NAND needs power to do reads and writes, the ability to control how long that charge remains in the NAND and how it discharges can help with data retention. Second, Crucial claims they changed the programming of how data is written to the NAND. By spending less time writing, more of the bits are 'at rest' rather than 'in flight', and less active power protection is required because the data is already secure in the NAND. Hence the claim that the SSD is immune to power loss rather than requiring protection.

When I asked Crucial how to test the feature, and whether pulling the plug was the typical use case, I received this response:

If you want to test the efficacy of this feature, the only way to do it is to pull power asynchronously during normal operations, particularly writes (or saves), and then check the integrity of previously saved data. It really is that simple. It must be noted that the protection is for Data-at-Rest, Not “Data-in-Flight”.

Recall our earlier mention of how TLC NAND works: each cell holds 3 bits of data, and it takes extra access or sorting steps to read or write the exact data that you want from that cell, or 'bucket'. Crucial states that it solved this problem with the new hardware, yet the protection only covers data at rest, not data in flight. Protecting data in flight would need a much more comprehensive backup solution, such as larger batteries or supercaps backing a memory cache (which would hold the in-flight data). For reference, Dell uses a 720 mAh Li-ion battery on its current PERC RAID controller cards.

To test this feature, I set up a file copy between an iPhone and the test PC consisting of numbered photos, and then pulled the power on the MX500 system at a particular point. The system was then restarted and the copied file count was compared to the point at which the power was pulled. For accuracy I flipped the power switch on the PSU. Note that the NTFS file system provides its own layer of protection against power-induced data loss, especially for large contiguous files. For example, NTFS will only commit a file once it deems it complete; partial files are discarded.

I did a simple file copy from the phone to a folder on the desktop, selecting 500 numerically sequential photos which included a few large video files. I flipped the power switch at around the 250th image to replicate a typical power loss situation where someone would be copying files from their device in this manner.

Upon restoring the power and booting back into Windows 10, CHKDSK did not run, i.e. the disk was not marked as dirty, but queued Windows updates for the current month did complete pre-login as the network was present. Windows rebooted itself after the updates and I proceeded to check the integrity of the copied files.

I flipped the power switch at approximately IMG_1252 in the range IMG_1000 to IMG_1500. iPhone photos are several MB each, so I could time this reasonably accurately. On disk, files IMG_1250 and IMG_1251 were copied correctly but IMG_1252, a 1MB video file, was corrupt. The file size was correct, but comparing it to the file on the phone confirmed it was corrupt.
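For a larger run, the manual comparison against the phone could be automated by hashing. The following is a minimal sketch, assuming the originals have first been copied to a known-good reference folder; the function names and folder layout are hypothetical and were not part of the test described here.

```python
import hashlib
from pathlib import Path

def sha256_of(path, block_size=1 << 20):
    """Hash a file in 1 MiB blocks so large videos do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for block in iter(lambda: handle.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()

def find_corrupt_copies(source_dir, copy_dir):
    """Return names of files present in both folders whose contents differ."""
    corrupt = []
    for original in sorted(Path(source_dir).iterdir()):
        copy = Path(copy_dir) / original.name
        if original.is_file() and copy.is_file():
            if sha256_of(original) != sha256_of(copy):
                corrupt.append(original.name)
    return corrupt
```

A hash comparison catches the case seen above, where the file size matches but the contents do not. Files missing from the copy folder are deliberately ignored, since those were never written before the power was cut.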

Given this result I am not sure what to think. The last file 'in flight' being corrupt looks to me no different to any other drive losing power. I am generalising here and basing this comment on my experience of using hundreds of different disk drives. Newer operating systems, disk controllers and SSD/HDD drives are more tolerant of power outages than really old hardware going back 20 or more years.

To accurately gauge Crucial's claims I would have to set up monitoring of the physical power rails on the SSD, which is not possible at the moment. Besides, this claim reads similarly to some claims made by the controller vendor, Silicon Motion, on their website, where they make general claims about data security and integrity.

I tested the power outage features to the best of Crucial's description, and there is not much more I can do on this front at this time. It may be worth revisiting in future, for example by trying simultaneous I/O operations rather than an almost idle system just copying files from an external storage device. The feature is intended to protect data that was already stored in the flash memory from being lost when the controller is reading and writing back that data.

It is impossible to protect data "in flight" 100%. If the operating system has not finished a file operation, there is not much the underlying hardware can do.

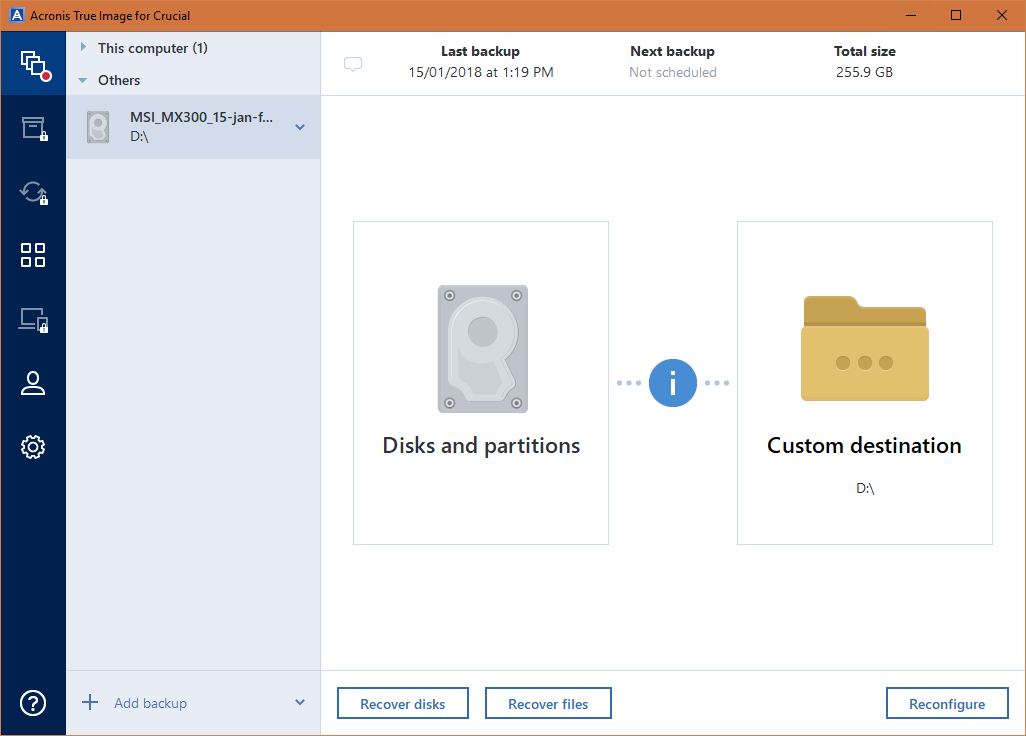

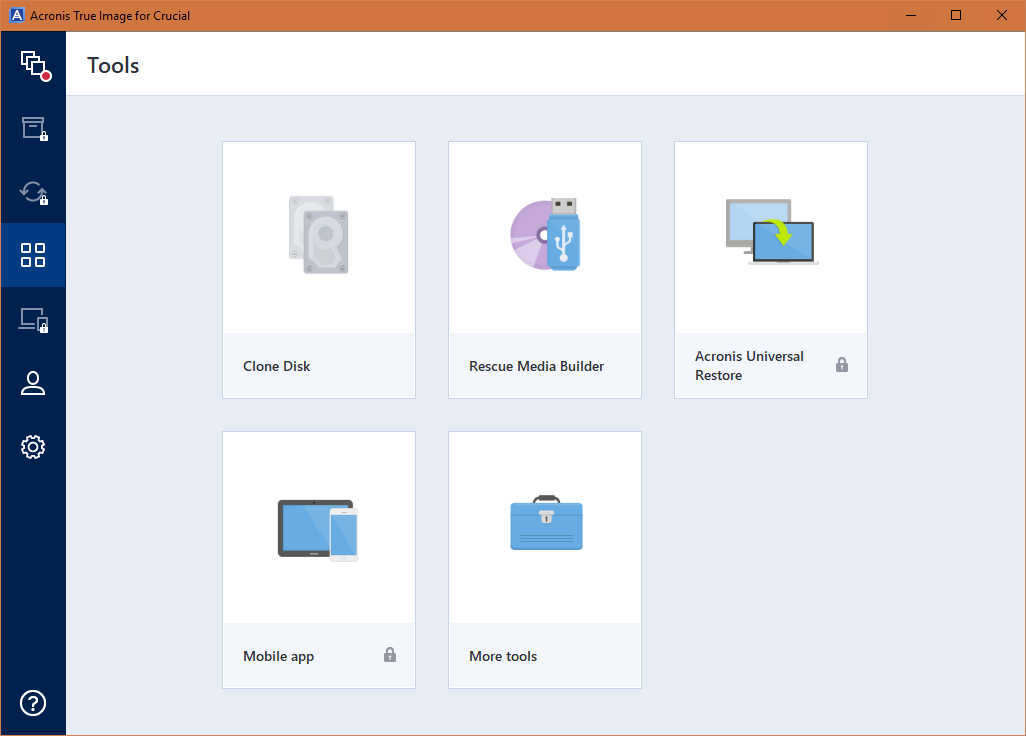

Bundled Acronis True Image 2017 cloning software

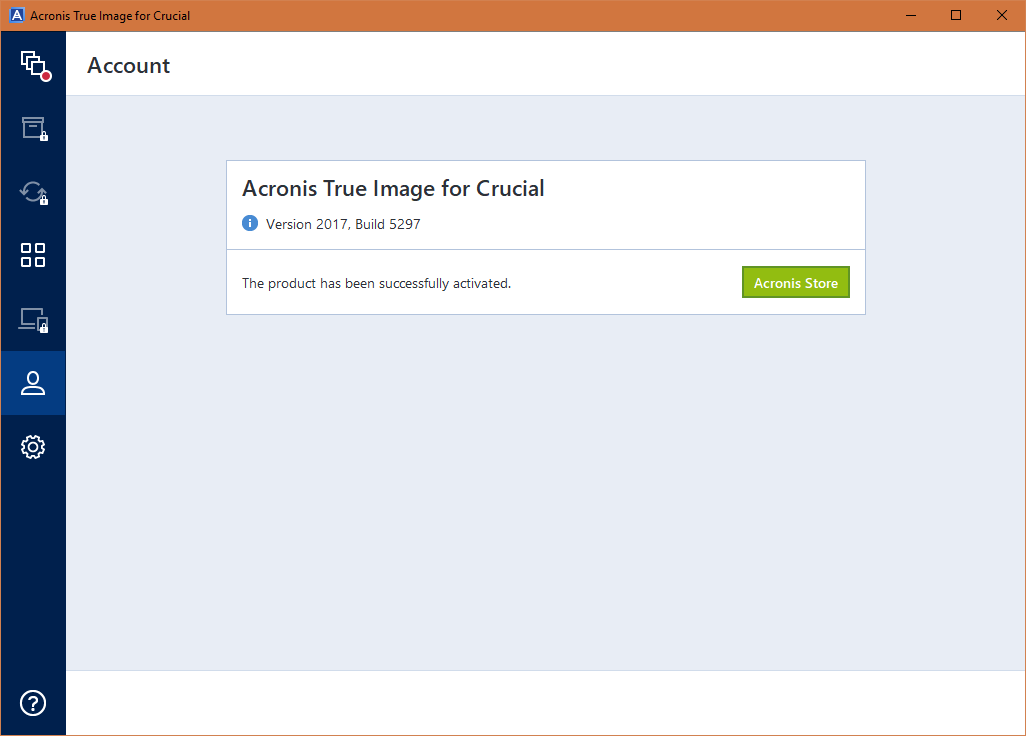

Crucial is one of the few vendors who bundle a vanilla version of Acronis, and not a proprietary tool that integrates the True Image engine. To use the software you simply need to download the Crucial-specific version using the provided link and have at least one Crucial drive present in the system. It is not restricted to copying to/from the Crucial drive. Previous drives bundled a CD key which activated the software, but this wasn't the case for the MX500.

What is not widely known is that Crucial and Acronis silently update the bundled version to the latest version. The version 'bundled' with the MX500 is based on True Image 2017 Build 5297 and includes such updates as native backup image mounting/handling. It also correctly preserves system reserved partition sizes when cloning between different sized drives, whether going small to large or large to small, which is appreciated and works perfectly. Although the OEM version is missing features from the full version, it has enough features to allow the user to keep an offline archive of backup images, and restore or clone them between different systems at will.

Archiving, Sync, Universal Restore, mobile app/backup and cloud functions are not available in the OEM version.

Restoring our 370GB test image stored on a 3TB HDD to the MX500 took 30 minutes using our test system. This works out to approx 200MB/s which is the peak speed of the 3TB Seagate HDD where the backup images were stored.
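The restore rate quoted above can be checked with a couple of lines. This is just the arithmetic, assuming decimal units (1 GB = 1000 MB); the helper name is made up for the example.

```python
def average_throughput_mb_s(size_gb, minutes):
    """Average transfer rate in MB/s, using decimal units (1 GB = 1000 MB)."""
    return size_gb * 1000 / (minutes * 60)

rate = average_throughput_mb_s(370, 30)
print(f"{rate:.1f} MB/s")  # prints "205.6 MB/s"
```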

The importance of the SSD controller in 2018

In a nutshell, the controller chip that powers the SSD is becoming less and less important for SATA interface drives, especially since the SATA interface itself became a bottleneck some years ago.

Bottlenecks are one thing, but these controllers typically use commodity CPU cores as part of their designs, where dual and even triple core embedded-class ARM cores can be commonly found.

Additionally, the flash interfaces on these controllers/SoCs have matured over the years and generations. Using the Silicon Motion chip found in the MX500 as an example, it has universal capability to interface with the latest flash standards of each vendor of Flash memory.

SSD controllers across the board have achieved near parity and it is for this reason that marquee companies like Intel, Crucial-Micron, Kingston, Toshiba-OCZ and others use third party controllers for the majority of their SSD products. Samsung and Apple are two unique cases where Samsung design their controller in house and Apple bought out a controller design company and now make their own in house.

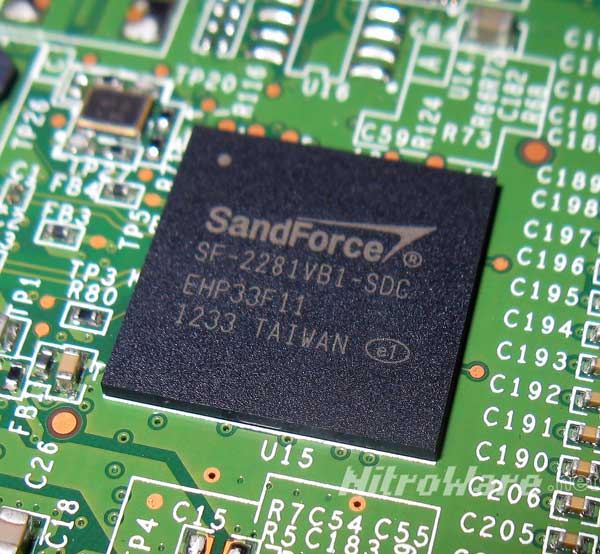

While Intel use their own in-house controller for their enterprise SATA, enterprise NVMe and Optane SSDs, their consumer and client drives have used off-the-shelf third party SSD controllers, i.e. the 520 we tested using SandForce and the just released 760p mid-market client NVMe drive using Silicon Motion. Between Intel, Kingston, Toshiba-OCZ, Seagate and Corsair we also have Link_A_Media (LAMD) and Phison controllers.

SSD controller technology has matured and plateaued due to the limitation of the SATA 6 Gigabit/s bus for sequential reads/writes and for IOPS. We have seen maximums of 560MB/s and 100K IOPS on several current SSD controller chips. Unless some magic IP is introduced, such as what SandForce did some years ago in their controller by compressing data on the fly (as is the case with the Intel drive in this review), there is not much that can be done other than provide better quality of service for SSD performance and naturally decrease cents per GB with denser and cheaper flash memory chips.
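To show how close those figures sit to the bus limit, here is a rough back-of-the-envelope sketch. It assumes only the 8b/10b line coding used by SATA and ignores all other protocol overhead; the 4 KiB transfer size used for the IOPS conversion is an assumption for illustration, not a figure from our testing.

```python
LINE_RATE_BITS_PER_S = 6_000_000_000  # SATA 6 Gbit/s raw line rate
DATA_BITS, LINE_BITS = 8, 10          # 8b/10b coding: 8 data bits per 10 line bits

def sata_payload_ceiling_mb_s():
    """Upper bound on payload bandwidth in MB/s after 8b/10b coding only."""
    return LINE_RATE_BITS_PER_S * DATA_BITS / LINE_BITS / 8 / 1_000_000

def iops_to_mb_s(iops, transfer_bytes=4096):
    """Bandwidth implied by an IOPS figure at an assumed transfer size."""
    return iops * transfer_bytes / 1_000_000

ceiling = sata_payload_ceiling_mb_s()
utilisation = 560 / ceiling
print(f"ceiling: {ceiling:.0f} MB/s, 560 MB/s is {utilisation:.0%} of it")
print(f"100K IOPS at 4 KiB: {iops_to_mb_s(100_000):.1f} MB/s")
```

With a ceiling of 600 MB/s before any command overhead, a drive delivering 560 MB/s has very little headroom left for a faster controller to exploit.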

What I mean by quality of service for an SSD is good or better performance across the whole spectrum of operations of the drive, from small transfer sizes to larger ones. In other words, more consistent performance and a more efficient product. The end result we want to see is linear performance reported in benchmarks and low latency. Larger transfer sizes, or a disk in a particularly heavily loaded state, whether it needs to process a lot of simultaneous data or is nearly full, can deliver worse quality of service for an SSD.

A cleverer and more powerful SSD controller can give us better efficiency and performance across the range of I/Os, but it won't deliver a revolution in performance, only a small evolution. It is relatively trivial to make a flash controller chip; there are dozens for USB flash drives. It is less so for an SSD using multiple flash memory ICs in an array, but this technology is now well understood.

Industry focus has been on chip stacking and density, such as 3D NAND from Intel-Micron Flash Technology (IMFT), SanDisk (WD), Samsung and Toshiba, which not only increases density but also allows higher performance. These developments give the consumer the biggest leap in storage, and more so when QLC flash becomes mainstream.

Later in the benchmarks section of this review you will get a visual indication of this by comparing the graph curves between the different SSDs. You don’t necessarily need to understand what the graph is reporting, but take note of the smoothness of the plot curve. A smoother curve without blips is better and will deliver more consistent performance.
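If you prefer a number to eyeballing a curve, one simple way to express smoothness is the coefficient of variation (standard deviation divided by mean) of the throughput samples. This is a sketch of one possible metric, not the method used by the benchmark tools in this review, and the sample values below are made up for illustration.

```python
from statistics import mean, pstdev

def coefficient_of_variation(samples):
    """Relative spread of the samples: lower means a smoother, more consistent curve."""
    return pstdev(samples) / mean(samples)

# Hypothetical throughput samples in MB/s, not measurements from this review.
steady_drive = [510, 515, 512, 508, 511]
spiky_drive = [520, 310, 530, 290, 525]

print(f"steady: {coefficient_of_variation(steady_drive):.3f}")
print(f"spiky:  {coefficient_of_variation(spiky_drive):.3f}")
```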